Sonic gnarl

This page collects together results of training SampleRNN networks on a diverse set of sounds, with audio sampled at various stages of training. SampleRNN had some buzz around it in 2017, since it was capable of generating a sort of "babble" from whatever sounds you trained it on, whereas other "audio deep learning" software did not work at all.

Audio datasets are usually large and homogenous. Often you'll find networks trained on long audiobooks, or piano recordings from a single composer. My experiments here all focus on coaxing SampleRNN to learn from much shorter sounds (see list of datasets). Using data augmentation, I was able to generate networks using a couple minutes of audio, rather than several hours worth, and rather than use low-resolution audio, I trained the networks at CD quality (44.1 kHz).

Under these conditions, the sounds that worked well shared "gnarly" or chaotic qualities, where they were seemingly information-rich, but also sonically coherent. Let's listen to some examples:

textures

snaps kuschel saw porto sparse bass evol sundae clapping room tone lip noise

classifications

To understand the networks, I classified them according to how well they produced interesting output. To each network I also assigned a score from 1-6, with 6 being the most exceptional. The best networks were rhythmic and gnarly, but the worst could be hellish, windy, or even silent; mediocre networks just get stuck in a muffled drone.

silence wind tones voice rhythmic gnarly garbled hellish

The output reflects SampleRNN's process of statistically-controlled feedback, where a window of previous samples dictates how the waveform should move. Thus some balance is required in the original sound in order to get a good output: a mix of silence, transients, tones, and noise. The best results exhibit an impulsive relabi that showcases a particular scale of rhythm, chaotic attractors within the network that converge toward self-similarity without sounding boring or repetitive.

pop material

In consideration of these textures I also sought out examples from existing music to deconstruct using this synthesis technique. A larger point about the heresy of training data is perhaps mediated by my own personal relationship to these sounds, which offered me, through familiarity with the training data, a sense of what the network was doing to it.

autechre steve reich jlin susumu sunra neural acid

Any existing sound - real, recorded, or synthesized - obeys a certain temporal logic that is disrupted by the generator's timeblind statistical mimicry. One can hear a flattening of the source sound, from a sonic event in time, into a fourth-dimensional shape that is traced by the network. There is also a semiotic question: what does it mean for something to sound like it was "made by A.I." when the whole point is to create a convincing replica?

my melodies

Finally I give some examples of my own melodies which I put through. Prior to the modern synthesizer renaissance I mainly wrote things in a DAW, so these sounds were originally written in a piano roll using virtual analog or FM synthesis, as opposed to the sample-based material that is mixed in above.

axial6 clouds fm9 fm6 pc1 quick3 trecento

spawn at gropius bau

On 6 April 2018, Holly and her ensemble gave a performance at Martin Gropius Bau, Berlin, consisting of a set of "training rituals" for her AI baby, Spawn. Part of the concept was bringing group activity into the networks - finding complex sounds we could only make with a crowd of people.

We took audio from these performances and ran it through SampleRNN, which two weeks later debuted the ISM hexadome production SPAWN TRAINING CEREMONY I DEEP BELIEF. A call-and-response hymn performed that night can be heard on Evening Shades (Live Training). The neural audio here sometimes gives the character of electronic voice phenomena - hearing voices that seem to emerge from static.

holly talking jingling keys crowd finger snaps applause tapping bottles screaming responses snapping 2 clapping 2

what do you hear in these sounds?

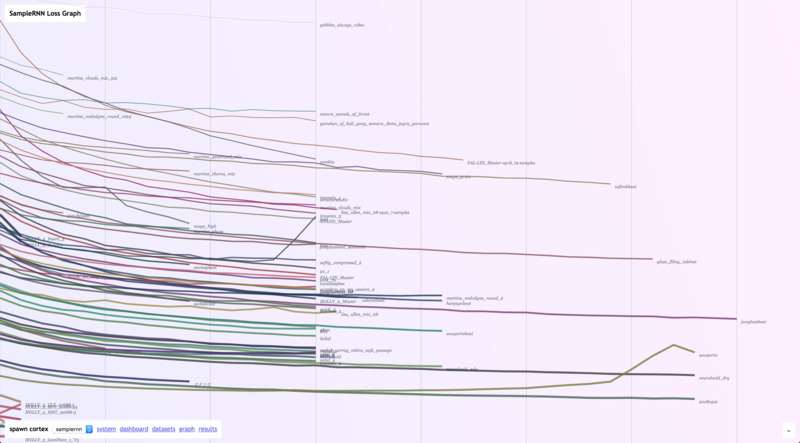

To train these SampleRNN networks, I started with a single sound 2-3 minutes long, then made several copies which were all slightly timestretched, to make my dataset. A single training pass against this dataset was called an "epoch". After each epoch, I generated 5 samples from the network, each one around a few seconds long. This gave me about 30 seconds per network, which was usually enough to tell if training was going well or not.

In some of the poorer training, you can hear things that the network gets stuck on. Silence, tones, and noise: in a way, all sounds are a combination of these three components. From a noisy transient, you can identify a sound, which decays into resonant, harmonic tones, and finally decays into silence. The stochastic nature of SampleRNN means there's always a chance you'll slip through a statistical crevice into a burst of noise. Overtraining leads to senility, where one trait dominates - endless noise, endless silence.

Likewise, at a small scale, sounds blur together. Given a piece of a waveform, which way will it move? SampleRNN uses larger time structures to control finer ones, which then condition a sequence generator that actually outputs the waveform. Audio fidelity is defined by sample rate and bit depth. Bit depth is fixed at 8 bits (256 possible positions in the waveform), but sample rate can be increased for higher "fidelity" at the expense of large scale structure.

When sounds come out of a statistical generator, are they really sounds anymore? Samples are related by context, but sounds unravel into higher dimensions. Stripped of any referent, they are heard the first time we listen to them. But what is this low-resolution space? Do you think that's reverb you're hearing?

You hear the data you put in, but the sound is of the network itself.

Back to index